A level results day yesterday was a very unusual day. There has been a lot of comment, a lot of confusion, and a lot of ‘noise’. It’s not yet clear whether we’ve heard the government’s final word on the subject: it’s still possible that last-minute amendments could be made to the process for generating grades, perhaps paralleling what has already happened in Scotland.

In this post, I want to question some features of the newspaper and media coverage of the day itself, the nature of some of the responses I’ve seen to the headlines, and some of the decisions made by the government and by Ofqual, the regulator. Suffice it to say, the quality of comment out there has been variable. Some thoughtful, lucid and careful analysis has appeared; other analysis has been crude, simplistic and misleading. The former type of analysis is vital at a time when a large number of young people find themselves in a really upsetting and difficult position and (understandably) want answers.

The initial decision

Some words first on the government’s initial decision, back in March, to cancel the summer exams. From this everything else has flowed.

Certainly there were those (including me) who felt that this was a decision that needn’t have been made. In the recent words of Vicky Bingham, head of South Hampstead High School, ‘we could have managed exams. With a bit of imagination and planning we could have done it’. This echoes what numerous teachers and education leaders were saying back in March.

In place of actual exams, the government asked schools to produce teacher-generated grades (or, to use the jargon, CAGs – centre assessment grades). These were then to be subjected to a process of review and moderation by exam boards, overseen by Ofqual, the exams regulator.

Ofqual and the government could have offered schools really clear advice about how they would conduct the process of reviewing and moderating the grades schools submitted. Perhaps they could have done so along the lines suggested here. They didn’t.

A further crucial intervention at this stage would have been to try to prevent pupils from missing university offers by dint of others’ best guesswork. Could special arrangements of some sort have been made for these pupils? If so, what? One possibility would have been to ask universities to defer places for them until they’d had a chance to sit actual exams, either in November or next June. Another would have been to ask universities to hold open as many places as possible, regardless of exam outcomes. If anything like this did happen, no one has heard of it.

It’s pretty clear to me that not enough thought went into looking after the interests of pupils in this category, or into finding something like a ‘fair’ route forward for them.

CAGs, Ofqual’s algorithm and grade adjustments

School-generated grades were always going to be a mixed bag. Some schools achieve very similar results year by year. Other schools can expect quite different results, depending on the cohort of students they have. Some schools have smaller year groups (where bigger year-by-year shifts are quite likely); some don’t.

Equally, some teachers and institutions – for various reasons – will have been quite generous with their pupils’ CAGs; others won’t. On the whole, generosity seems to have been more common. Back in July, Ofqual revealed that schools had submitted grades that were 12 percentage points higher than last year’s grades.

Some form of control over what was happening would be necessary, then, if we were not to see significant grade inflation. Or, to frame the matter differently, if we were not to see some pupils benefit from having generous or optimistic teachers while others had no such privilege.

Ofqual’s plan for dealing with the grades gathered from schools was to apply statistical analysis and a specially configured algorithm to the data it had received. Crucially, schools’ exam performance in previous years would be factored into the analysis and used to generate a similar batch of results for the present year.

Ofqual’s use of its algorithm is described, with clarity and in detail, here in (of all places) the Daily Mail. What we have ended up with, as a result, is an overall picture that looks much like those we have seen in recent years.

The risk of sudden grade inflation, then, has been avoided. But the changes needed to achieve a familiar-looking picture have been considerable, and they have created needless upset.

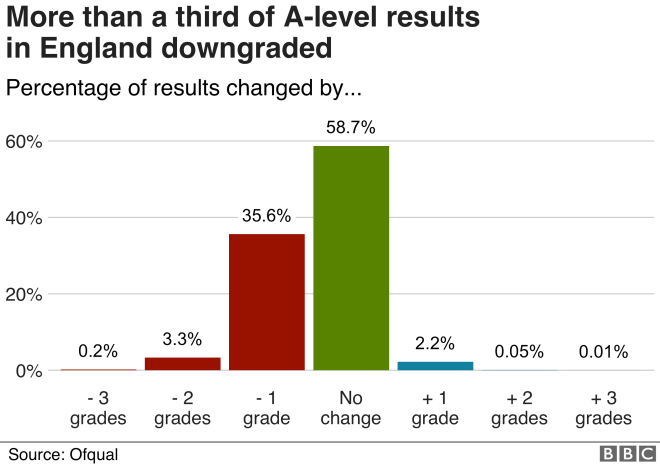

An article on the BBC website reports how CAGs as a whole have been affected by ofqual’s interventions. The graph below shows the overall picture and speaks for itself:

A lot of press reporting has focussed on the fact that high-performing (outlier) students in schools where results are mostly low or average have been badly affected by the (mainly downward) adjustments that have been made. If you are at a school whose pupils rarely achieve top grades, and your teachers have (justifiably) predicted you, say, straight A*s, you are less likely than a pupil at a school where results are very often high to receive your teachers’ predictions once Ofqual’s algorithm has made its adjustment. There is a good example of precisely this situation here.

To compensate for this sort of situation, Ofqual could have created a clear, straightforward appeals process, as suggested by Sam Freedman in this excellent Twitter thread here. The government could perhaps even have made special arrangements for such pupils to sit their exams in June or July. It didn’t. Instead, it’s been left to universities to pick up the pieces, making ad hoc judgments about the students who find themselves in this situation. Some students, inevitably, have been left disappointed. And some universities/courses/colleges have been more generous than others in responding to the situation.

For the vast majority of students who have experienced it, being downgraded must be a very bitter pill to swallow. Students have been able to find out the grades their schools submitted on their behalf. For those who have not received those grades, especially if this means missing out on a university place, I feel incredibly sorry. My overriding feeling is that all the problems we have seen could have been obviated earlier in the year if the government had simply been determined that, come what may, all pupils would sit their exams.

How pupils have been affected: by school, by background

Press reporting of the A level debacle has also focussed on differences in outcomes between pupils at different types of school and from different socio-economic backgrounds. Clearly there are some slight differences, depending on these factors, in the likelihood of having your teachers’ grades changed. But to talk of ‘bias’, as some commentators have done, in my opinion profoundly distorts the issue.

The table below illustrates the issue in relation to school type:

The first table summarises adjustments made by school type. A big part of the commentary claiming that Ofqual’s policy shows ‘bias’ toward independent schools has hinged on the statistic highlighted in yellow: the proportion of A*/A grades awarded to independent school pupils rose by 4.7 percentage points compared with last year. There are several things to say here, and perhaps I’m particularly inclined to say them as someone who works in the independent sector.

First, the independent school sector is diverse. Inevitably some schools will have done very badly out of what’s happened, and their statistics won’t reflect the overall trend. Others will have done well. A school that sees large variations in year-by-year performance and has a particularly good year group this year (with GCSE results to match), for example, may not have done well out of Ofqual’s review process. Put simply, many pupils at independent schools won’t have been advantaged by what has happened this year at all.

Second, although the 4.7 percentage point rise looks clearly higher than the other figures in the table, it is in fact not much out of keeping with them once it is seen in proportion to the A*/A grades previously awarded: it represents an 11% increase in A*/A grades. For secondary comprehensive pupils there was a 9% increase in these grades; for selective secondary schools it was 3%, for sixth form/FE colleges 1.4%, for academies 7% and for ‘other’ institutions 13%. So independent school pupils have done slightly better on the whole, but the 4.7-point figure isn’t the outlier it might seem in the table once the statistics are seen in proportion.

Third, as some in the commentariat have pointed out, independent schools are more likely than other types of school to have small classes. Ofqual has said that there is a problem applying its algorithm to small classes: the data pool is too small. As a consequence, pupils in small classes have been more likely to receive their teacher-allocated grades. A further word here on these small classes, which some commentators have described as sites of privilege and advantage. Well, another way of seeing them is simply as places where subjects that are currently less faddish than the most popular A level choices are being taught.

Fourth, it may just be that teachers in some schools (e.g. selective secondary schools and sixth form/FE colleges) submitted less optimistic CAGs for their pupils. If so, Ofqual should have adjusted for this more than it has done.

Below is a summary of outcomes for pupils of different socio-economic backgrounds:

The bullet points above the table are worth reading. On the face of it, it perhaps looks as though students in the ‘Low SES’ (socio-economic status) category have been more harshly treated: 10.42% of their CAGs have been downgraded, as opposed to 9.49% for medium SES pupils and 8.34% for high SES pupils.

However, a) as the table notes, teachers of low SES pupils are more likely to over-predict (something borne out by historical data); and b) low SES pupils have actually achieved more highly this year (74.60% achieving C or above) than in either 2018 or 2019.

It has been a similarly good year for those in the medium SES category, with 78.20% of their grades at C or above, a better figure than in either 2018 or 2019.

Only those in the high SES category have done worse than in 2018, though they have done better than in 2019.

So, looked at carefully, ‘bias’ is difficult to detect here, or in relation to school type. Of course, it ought to go without saying that it would be bizarre to find a regulator showing ‘bias’ toward one type of school or another.

The individual and the system

From my point of view, as I’ve said above, the real argument about these A level ‘results’ ought to have been happening in March. It’s all very well Keir Starmer today condemning the ‘disaster’ of this year’s results (and he isn’t wrong), but where was he earlier in the year, when he should have been pointing out the entirely predictable problems we’ve seen?

For a certain type of newspaper columnist, and for a certain type of Twitter commentator, everything we’ve seen over the past two days points to ‘systemic bias’, to social inequality, and to entrenched injustice. ‘The whole [exam] system’, as one senior academic puts it, ‘is not to recognise individual merit or attainment, but to maintain its own credibility in the eyes of big business and pundits, and to sort the population according to predetermined criteria and maintain the status quo’. Some would say that’s an extraordinarily cynical way of seeing things.

But a less extreme version of this strand of opinion has been endorsed by numerous public figures. It holds simply that this year’s results have been ‘biased’, or that, in the words of the historian Peter Frankopan, the results have been ‘devastating for social equality/mobility’. Or that, in the words of Andy Burnham, ‘the government created a system which is inherently biased’ against FE and sixth form colleges.

In my view, looked at carefully, the tables considered in the section above highlight the obvious problems with perspectives like these: the only ‘bias’ I can detect is the attempt to replicate, broadly, the results of previous years.

What is clear to me is that an opportunity has been seized to make some political capital out of the situation by suggesting that the government has been biased against those from lower socio-economic backgrounds. On this occasion, though, the data simply doesn’t bear that out. And it’s almost as though some people are so intent on foisting their social theories and political assumptions on the data that it hardly matters to them how badly those theories and assumptions tally with the facts.

A more productive way forward, surely, would be to emphasise that this has been a fiasco that has touched many people (pupils, teachers, parents, schools, universities) across the whole education sector, and across all sections of society; that very many pupils have been adversely affected through no fault of their own; that proper plans and support should be put in place for those pupils who want to sit their exams; and that now is not the time to play political football with young people’s lives and aspirations, but the time to respond to the situation without reaching for extreme judgments.

It’s good to see, for example, that Worcester College, Oxford, has promised to honour its offers to everyone who received one. I wonder about the practicalities, and the good sense, of all universities and colleges doing this, but it’s certainly a very decent gesture.

Meanwhile, if there is to be a wide-ranging and trenchant critique of the socio-economic forces at play around A level assessments, it would be nice to hear it done thoroughly, accurately, and at a really opportune moment: like, for example, in March.

Spot on. Curious (if I may) to know what you make of this: there seems to be a lack of recognition that as much as exams are about individual achievement, to a large extent that achievement lies in its relationship to other people’s results. Not just within the 2020 cohort, but with past and future cohorts too. If we lose that comparability through dramatic grade inflation, we are undermining a large part of the reason why pupils sit exams in the first place. Pressure now needs to be on a swift and fair appeals process, as you say, and definitely not on the Scottish option.


Thank you, and thanks also for commenting! Yes, I agree with that re: other people’s results, and I think some pupils/university students are quite keenly aware of that fact, while for others it doesn’t much matter. And yes, agree also that comparability with previous year groups matters for lots of reasons. Let’s hope some sensible arrangements get put in place for appeals etc soon!
